Home Assistant Core 2024.6 has been released. This update introduces several new features, with a focus on AI integration, media player commands, data table improvements, tag entities, and collapsible blueprint sections. Notable improvements in dashboarding include the ability to conditionally display sections and cards.

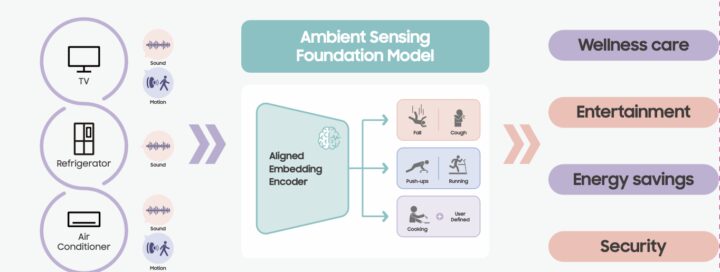

In this release, users can control their home through conversation agents based on large language models (LLMs), such as those from OpenAI and Google AI. A new setting lets these agents access the intent system that powers Assist, which gives them control over exposed entities and lets them process complex commands.
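As a rough illustration of how a text command can be handed to a conversation agent, the sketch below builds (but does not send) a request to Home Assistant's REST endpoint `/api/conversation/process`. The host name, the token, and the agent ID `conversation.openai` are placeholder assumptions rather than values from the announcement; the real agent ID depends on how the integration is set up in your installation.

```python
import json
import urllib.request


def build_conversation_request(base_url, token, text, agent_id=None, language="en"):
    """Build a POST request for Home Assistant's conversation endpoint.

    base_url, token, and agent_id are supplied by the caller; nothing here
    is specific to the 2024.6 release.
    """
    payload = {"text": text, "language": language}
    if agent_id is not None:
        # Optional: route the sentence to a specific conversation agent.
        payload["agent_id"] = agent_id

    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/conversation/process",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # long-lived access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values, assumed for illustration only.
request = build_conversation_request(
    "http://homeassistant.local:8123",
    "YOUR_LONG_LIVED_TOKEN",
    "Turn off the kitchen lights",
    agent_id="conversation.openai",
)
# To actually send it: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to inspect the payload first. The agent can only act on entities that have been exposed to it.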

Voice assistant capabilities have also been enhanced, with improvements for media control and additional developments in AI integration. A Voice – Chapter 7 livestream is scheduled for June 26 to provide further details.

The release includes recommended model settings for LLMs, chosen to balance accuracy, speed, and cost. According to the announcement, Google AI is the more cost-effective option, while OpenAI performs better on non-smart-home queries. The announcement also mentions local LLMs through the Ollama integration, along with an ongoing collaboration with NVIDIA on future improvements.

We’re excited about this release, but we’ll be even more excited once this new feature works with locally hosted LLMs.

Check out the Release Party recording for demos of this new feature and others!

For more information, see the Home Assistant 2024.6 release announcement.